Recomputing ML GPU performance: AMD vs. NVIDIA

I am pretty impressed seeing Lisa Su doing her best to steer the AMD ship towards better AI support in GPUs, with the Hugging Face partnership and by convincing George Hotz to submit more bug reports.

(For context, Hotz raised $5M to improve RX 7900 XTX support and sell a $15K prebuilt consumer computer that runs 65B-parameter LLMs. A plethora of driver crashes later, he almost gave up on AMD.)

There are quite a few issues to overcome, though.

While that GPU is great (Stable Diffusion iteration speed per GPU cost is top-tier), a cursory study would be flawed: public GPU benchmarks like TechPowerUp, Tom’s Hardware, etc. give:

Where do the figures come from?

While there is no official breakdown, only official figures, people widely compute them this way:

| Name | Price | Processors | Frequency | TFLOPS (FP16) | TFLOPS/€ |
|---|---|---|---|---|---|
| RX 7900 XTX | €1110 | 6144 | 2.5 GHz | 122.88 | .1107 |
| RX 7900 XT | €942 | 5376 | 2.4 GHz | 103.22 | .1096 |
| RTX 4090 | €1770 | 16384 | 2.52 GHz | 82.58 | .0467 |
| RTX 3060 | €314 | 3584 | 1.78 GHz | 12.76 | .0405 |
| RTX 3080 | €905 | 8704 | 1.71 GHz | 29.76 | .0329 |
| RTX 3090 | €1500 | 10496 | 1.70 GHz | 35.68 | .0238 |
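The TFLOPS column can be reproduced, to within 0.01, from the processor count and frequency. A minimal sketch, assuming (this is a reconstruction that matches the figures, not an official formula) that NVIDIA is credited 2 FLOPs per CUDA core per cycle (one FMA: a multiply and an add), and AMD RDNA3 is credited 8 FLOPs per stream processor per cycle (a dual-issued packed dot product, a×b+c×d+e, i.e. 2 × 4). The dictionary and function names are illustrative.

```python
# Naive peak FP16 throughput: processors x clock x FLOPs credited per cycle.
# Assumption: NVIDIA counts one FMA (2 FLOPs) per CUDA core per cycle;
# AMD RDNA3 counts a dual-issued dot2 accumulate (2 x 4 FLOPs) per stream processor.
FLOPS_PER_CORE_CYCLE = {"nvidia": 2, "amd": 2 * 4}

# (name, vendor, price in EUR, processors, boost clock in GHz), from the table
GPUS = [
    ("RX 7900 XTX", "amd", 1110, 6144, 2.5),
    ("RX 7900 XT", "amd", 942, 5376, 2.4),
    ("RTX 4090", "nvidia", 1770, 16384, 2.52),
    ("RTX 3060", "nvidia", 314, 3584, 1.78),
    ("RTX 3080", "nvidia", 905, 8704, 1.71),
    ("RTX 3090", "nvidia", 1500, 10496, 1.70),
]


def naive_tflops(vendor: str, cores: int, ghz: float) -> float:
    """processors x GHz x FLOPs/cycle gives GFLOPS; divide by 1000 for TFLOPS."""
    return cores * ghz * FLOPS_PER_CORE_CYCLE[vendor] / 1000


for name, vendor, price, cores, ghz in GPUS:
    tflops = naive_tflops(vendor, cores, ghz)
    print(f"{name:12} {tflops:7.2f} TFLOPS  {tflops / price:.4f} TFLOPS/EUR")
```

The small last-digit differences (for example 29.77 instead of 29.76 for the RTX 3080) come from rounding in the published clocks.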

That is an unfair comparison, though, because AMD’s instruction (the dual-issued packed dot product, V_DUAL_DOT2ACC_F32_F16) is more niche than FMA, so hitting this performance sweet spot is uncommon, and because both of those GPUs have other tricks up their sleeves that yield higher FLOPS.

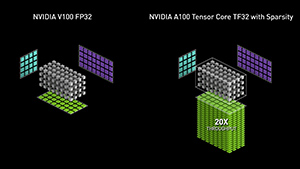

The big one on NVIDIA is the Tensor Core. With it, you can run an instruction that does a 4×4 by 4×8 matrix multiplication (page 25) in 1 cycle within a single Tensor Core (one for every 32 CUDA cores).

2×4^2×8 (matmul ops) ÷ 1 (cycles) = 256 ops/TC/cycle.

(There is some variation between NVIDIA GPUs in which matrix sizes are supported and in how many cycles the instruction takes, and NVIDIA keeps major aspects of its instruction set secret, but on the recent 30 and 40 series, this 256 number seems fairly constant.)

That actually puts the RTX 4090 at 256 × 512 (Tensor Cores) × 2.52 (GHz) ÷ 1K (GHz per teracycle/s) = 330 TFLOPS in FP16… Much higher than the 123 TFLOPS that impressed Hotz on the RX 7900 XTX!
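The same arithmetic as a small sketch. It assumes, as the figure above implies, 512 Tensor Cores on the RTX 4090 (16384 CUDA cores ÷ 32) and one 4×4 by 4×8 matmul per Tensor Core per cycle; `matmul_ops` and `peak_tflops` are illustrative helper names.

```python
def matmul_ops(m: int, k: int, n: int) -> int:
    """FLOPs for an (m x k) @ (k x n) product accumulated into C.

    Each of the m * n * k multiply-accumulates counts as 2 FLOPs.
    """
    return 2 * m * k * n


def peak_tflops(ops_per_unit_cycle: float, units: int, ghz: float) -> float:
    """ops/unit/cycle x units x GHz gives GFLOPS; divide by 1000 for TFLOPS."""
    return ops_per_unit_cycle * units * ghz / 1000


# Assumption: one 4x4 by 4x8 matmul per Tensor Core per cycle.
tc_ops_per_cycle = matmul_ops(4, 4, 8) / 1  # divided by 1 cycle
tensor_cores = 16384 // 32                  # one Tensor Core per 32 CUDA cores
rtx_4090 = peak_tflops(tc_ops_per_cycle, tensor_cores, ghz=2.52)
print(tc_ops_per_cycle, tensor_cores, round(rtx_4090, 1))  # 256.0 512 330.3
```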

But AMD now has the same trick. In RDNA3, with WMMA, the RX 7900 XTX has an instruction, V_WMMA_F16_16X16X16_F16, that does two 16×16 matrix multiplications in 32 cycles in a single Compute Unit (two sets of 32 threads).

2×16^3 (matmul ops) × 2 ÷ 32 (cycles) = 512 ops/CU/cycle.
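Written out under the same counting convention (2 FLOPs per multiply-accumulate), and taking the rate of two matmuls per Compute Unit every 32 cycles from the text as given:

```python
# V_WMMA_F16_16X16X16_F16 multiplies 16x16 by 16x16 matrices.
M = K = N = 16
ops_per_wmma = 2 * M * K * N  # each multiply-accumulate counts as 2 FLOPs

# Rate taken from the text: two such matmuls per Compute Unit every 32 cycles.
matmuls_per_cu = 2
cycles = 32
ops_per_cu_cycle = ops_per_wmma * matmuls_per_cu / cycles
print(ops_per_wmma, ops_per_cu_cycle)  # 8192 512.0
```

That is twice the 256 ops per Tensor Core per cycle quoted for NVIDIA.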

This uses the same underlying silicon circuits as V_DUAL_DOT2ACC_F32_F16: the architecture lays out the matrices in Vector General-Purpose Registers (VGPRs). Each cell of the output matrix is computed by multiplying one row from input matrix A with one column from input matrix B, two input cells at a time (two adjacent cells of the A row packed inside the same VGPR, and two adjacent cells of the B column packed together inside another VGPR), so that they can be fed to the single-cycle packed dot product instruction.
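To make the packing concrete, here is a functional model of how one output cell is built from packed dot products, two input cells per step, so a 16-long row takes 8 steps. It models data flow only, not timing or register allocation; `dot2acc` is a hypothetical stand-in for the hardware instruction, not a real API.

```python
import random


def dot2acc(a_pair: tuple, b_pair: tuple, acc: float) -> float:
    """Hypothetical model of a packed dot2 accumulate: a0*b0 + a1*b1 + acc.

    On hardware each pair would be two FP16 values packed in one VGPR;
    Python floats are 64-bit, so this only models the data flow.
    """
    return a_pair[0] * b_pair[0] + a_pair[1] * b_pair[1] + acc


def output_cell(row: list, col: list) -> tuple:
    """Build one output cell from dot2 steps; return (value, number of steps)."""
    acc, steps = 0.0, 0
    for i in range(0, len(row), 2):  # two adjacent input cells per step
        acc = dot2acc((row[i], row[i + 1]), (col[i], col[i + 1]), acc)
        steps += 1
    return acc, steps


random.seed(0)
n = 16
A = [[random.random() for _ in range(n)] for _ in range(n)]
B = [[random.random() for _ in range(n)] for _ in range(n)]

row = A[0]                          # row 0 of A
col = [B[k][0] for k in range(n)]   # column 0 of B
value, steps = output_cell(row, col)
expected = sum(r * c for r, c in zip(row, col))
print(steps, abs(value - expected) < 1e-9)  # 8 True
```

Dual issue, described next, would run a second such chain for the adjacent output cell during the same steps.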

Within that same instruction, encoded in VOPD (a SIMD-like system to execute one operation on an even register while it executes another on an odd one at the same time), an adjacent output cell also multiplies through its first two input cells simultaneously, using dual issue.

The input row has size 16 and each cycle consumes two of its cells, so those two output cells are completed in 8 cycles. The 16 pairs of adjacent output cells along their two diagonals are computed by 16 parallel threads (on separate stream processors) within the same 8 cycles.

We have done two diagonals (32 output cells); there are 14 diagonals left.

Inside that Compute Unit, we still have 16 stream processors that we can use; they can handle two more output diagonals within the same 8 cycles. Once our first four diagonals are computed, we sequentially compute the next four diagonals in the next 8 cycles, and so forth for the next four, and the last four after that.

In total, we have computed the matrix multiplication in 4 × 8 = 32 cycles, which checks out.

Why can’t we do the matrix multiplication in 16 cycles by using all 64 threads inside the Compute Unit?

Section 7.6 of the instruction set manual indicates:

[Dual issue] is legal only for wave32.

WMMA supports both wave32 and wave64, but it sounds like dual issue is deactivated in wave64, so it would still take 32 cycles, making wave64 a poorly documented and unfavorable proposition, I believe.
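The cycle counts above follow from a simple model of the schedule. This is a sketch under stated assumptions: every thread finishes its output cells in n / 2 cycles (two inputs per dot2 step), threads run perfectly in parallel, and no other register or issue constraint applies. Parameter names are illustrative, and the wave64 cases model the reading of section 7.6 given above.

```python
import math


def wmma_cycles(n: int = 16, dot_width: int = 2, threads: int = 32,
                cells_per_thread: int = 2) -> int:
    """Cycles for one n x n x n matmul under the diagonal schedule described.

    Each thread completes `cells_per_thread` output cells every n / dot_width
    cycles; the n * n output cells are processed in rounds of
    threads * cells_per_thread cells.
    """
    cycles_per_round = n // dot_width
    rounds = math.ceil(n * n / (threads * cells_per_thread))
    return rounds * cycles_per_round


# wave32 with dual issue: 32 threads x 2 cells = 64 cells per 8-cycle round.
print(wmma_cycles(threads=32, cells_per_thread=2))  # 32
# wave64 without dual issue: 64 threads x 1 cell, still 4 rounds.
print(wmma_cycles(threads=64, cells_per_thread=1))  # 32
# Hypothetical wave64 with dual issue, the 16-cycle case asked about above.
print(wmma_cycles(threads=64, cells_per_thread=2))  # 16
```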

All in all, using WMMA, the RX 7900 XTX can crank through 512 × 96 (Compute Units) × 2.5 (GHz) ÷ 1K (GHz per teracycle/s) = 123 TFLOPS in FP16…

That ends up being less than half the performance of the RTX 4090. The superior number of operations per Compute Unit is offset by the crushingly lower number of units: 96 Compute Units against 512 Tensor Cores.
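Putting the two peak figures side by side makes the trade-off explicit. A sketch reusing the per-unit rates from the text (512 ops/CU/cycle, 256 ops/TC/cycle) with the same illustrative `peak_tflops` formula:

```python
def peak_tflops(ops_per_unit_cycle: float, units: int, ghz: float) -> float:
    """ops/unit/cycle x units x GHz gives GFLOPS; divide by 1000 for TFLOPS."""
    return ops_per_unit_cycle * units * ghz / 1000


rx_7900_xtx = peak_tflops(512, units=96, ghz=2.5)   # WMMA, per Compute Unit
rtx_4090 = peak_tflops(256, units=512, ghz=2.52)    # per Tensor Core

per_unit_ratio = 512 / 256    # AMD does 2x the work per unit and cycle...
unit_count_ratio = 96 / 512   # ...but has under a fifth as many units
print(f"{rx_7900_xtx:.2f} vs {rtx_4090:.2f} TFLOPS")  # 122.88 vs 330.30 TFLOPS
print(f"ratio {rx_7900_xtx / rtx_4090:.2f}")          # ratio 0.37
```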

Perhaps the AMD strategy is to have the better circuit ready before migrating to the TSMC N5 (“5 nm”) process at a less affordable price.

In practice, the lower performance is less of an issue for AI training, because training runs are famously limited in their parallelization opportunities (even the best training runs typically reach only 50% GPU use at a given time).

The VRAM bandwidth then matters a lot for large models, and the RX 7900 XTX, despite using GDDR6 instead of GDDR6X, has a higher bandwidth than the RTX 3090, thanks to its faster memory clock. Still, its bandwidth is lower than the RTX 4090’s (but at a lower price point).
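The bandwidth comparison follows from bus width and per-pin data rate. The specs below are assumptions taken from public spec sheets rather than from this post: a 384-bit bus on all three cards, with 20 Gb/s GDDR6 on the RX 7900 XTX, 19.5 Gb/s GDDR6X on the RTX 3090, and 21 Gb/s GDDR6X on the RTX 4090. They reproduce the bandwidth figures used in the table.

```python
def bandwidth_gb_s(bus_width_bits: int, gbit_s_per_pin: float) -> float:
    """Peak bandwidth in GB/s: pins x Gb/s per pin / 8 bits per byte."""
    return bus_width_bits * gbit_s_per_pin / 8


# Assumed public specs: (name, bus width in bits, per-pin data rate in Gb/s)
CARDS = [
    ("RX 7900 XTX", 384, 20.0),  # GDDR6
    ("RTX 3090", 384, 19.5),     # GDDR6X
    ("RTX 4090", 384, 21.0),     # GDDR6X
]

for name, bus, rate in CARDS:
    print(f"{name:12} {bandwidth_gb_s(bus, rate):6.0f} GB/s")  # 960, 936, 1008
```

With equal bus widths, the per-pin rate alone decides the ordering, which is the faster-memory-clock point made above.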

| Name | Price | TFLOPS (FP16) | Memory bandwidth (GB/s) | RAM (GB) | TFLOPS/€ | Value (TFLOPS·GB·MB/s/€³) |
|---|---|---|---|---|---|---|
| RTX 4090 | €1770 | 330 | 1008 | 24 | .186 | 1.4 |
| RTX 3060 | €314 | 51 | 360 | 12 | .162 | 7.1 |
| RTX 3080 | €905 | 119 | 760 | 10 | .131 | 1.2 |
| RX 7900 XTX | €1110 | 123 | 960 | 24 | .111 | 2.1 |
| RX 7900 XT | €942 | 103 | 800 | 20 | .109 | 2.0 |
| RTX 3090 | €1500 | 143 | 936 | 24 | .095 | 1.0 |
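The last two columns of the table above can be recomputed from the others. The reading of the value unit used here (bandwidth converted to MB/s, price cubed) is inferred from the unit label; it matches every row of the table, but it is an interpretation, not a formula stated in the text.

```python
# (name, price EUR, TFLOPS FP16, bandwidth GB/s, VRAM GB), from the table
ROWS = [
    ("RTX 4090", 1770, 330, 1008, 24),
    ("RTX 3060", 314, 51, 360, 12),
    ("RTX 3080", 905, 119, 760, 10),
    ("RX 7900 XTX", 1110, 123, 960, 24),
    ("RX 7900 XT", 942, 103, 800, 20),
    ("RTX 3090", 1500, 143, 936, 24),
]


def value(price: float, tflops: float, gb_s: float, ram_gb: float) -> float:
    """TFLOPS x GB x MB/s / EUR^3, with bandwidth converted from GB/s to MB/s."""
    return tflops * ram_gb * (gb_s * 1000) / price**3


for name, price, tflops, gb_s, ram in ROWS:
    print(f"{name:12} {tflops / price:.3f} TFLOPS/EUR  "
          f"value {value(price, tflops, gb_s, ram):.1f}")
```

Cubing the price penalizes expensive cards three times over (once per "good" quantity), which is why the cheap RTX 3060 dominates this metric.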

(The value unit was edited per Scheurneus’ suggestion.)

Thus the RX 7900 XTX is not technically the best in TFLOPS per price, as was presumed in Hotz’s raise announcement.

But that metric is not crucial for building LLM machines. Purely looking at hardware, that GPU is a fine choice for the job, in part because it has a fairer RAM-per-dollar offer, so it can hold a large model without needing pricier GPUs while likely reaching reasonable inference speeds.
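A back-of-the-envelope check of the "hold a large model" argument counts the cards needed for the weights alone and bounds decoding speed by memory bandwidth. This is a rough sketch under loud assumptions: FP16 weights (2 bytes per parameter), no room reserved for activations or KV cache, and purely memory-bound generation with the weights split across cards so that their bandwidth adds up (ideal tensor parallelism). Real throughput will be lower; the function names are hypothetical.

```python
import math


def gpus_needed(params_billion: float, vram_gb: float,
                bytes_per_param: float = 2) -> int:
    """Minimum card count to hold the weights alone (FP16 = 2 bytes/param).

    Ignores activations, KV cache and framework overhead.
    """
    weights_gb = params_billion * bytes_per_param
    return math.ceil(weights_gb / vram_gb)


def decode_tokens_per_s_upper_bound(params_billion: float,
                                    total_bandwidth_gb_s: float,
                                    bytes_per_param: float = 2) -> float:
    """Ceiling if every generated token streams all weights from VRAM once."""
    return total_bandwidth_gb_s / (params_billion * bytes_per_param)


# A 65B-parameter model on 24 GB, 960 GB/s cards (RX 7900 XTX figures).
cards = gpus_needed(65, 24)
print(cards, round(decode_tokens_per_s_upper_bound(65, cards * 960), 1))  # 6 44.3
```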

The other thorns in AMD’s side in AI, though, rear their ugly heads:

- The compilers don’t produce great instructions;
- The drivers crash frequently: ML workloads feel experimental;
- Software adoption is getting there, but kernels are less optimized within frameworks, in particular because of the fracture between ROCm and CUDA. When you are a developer and you need to write code twice, one version won’t be as good, and it is the one with less adoption;
- Stack Overflow mindshare is smaller. Debugging problems is thus harder, as fewer people have encountered them.

(I will note, however, that the wealth of information provided by AMD outshines that from NVIDIA tremendously, even though AMD could do more to popularize those subtleties and explain how to perform specific workloads like BERT training, into which NVIDIA puts welcome care. Just contrast NVIDIA’s matmul page with AMD’s. AMD doesn’t even recognize its own flagship GPUs as supported for ROCm, which is mind-boggling for anyone coming from NVIDIA’s superior CUDA support.)
